Introduction

Artificial Intelligence (AI) has transformed industries ranging from healthcare and finance to recruitment and law enforcement. However, the ethical implications of AI are a growing concern, particularly when it comes to bias in training data. While AI promises efficiency and objectivity, the reality is that biased data can reinforce and even exacerbate societal inequalities.

The Root of Bias in AI Training Data

AI systems are only as good as the data they are trained on. If this data contains historical biases or lacks diversity, the AI model will learn and replicate these issues. Some common sources of bias in training data include:

- Historical Inequities: Many datasets reflect historical discrimination. For example, training an AI model on hiring data from a male-dominated industry may lead to biased hiring recommendations that favour men.

- Sampling Bias: If the data does not represent the full diversity of the population, the AI will perform poorly for underrepresented groups. A facial recognition system trained mainly on lighter-skinned individuals will have higher error rates for darker-skinned individuals.

- Prejudiced Labelling: If human annotators hold subconscious biases, they can unknowingly encode those biases in the labels the model learns from. For example, a crime prediction AI trained on policing records that reflect officers' biased judgements may reinforce racial profiling.
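
One rough way to spot sampling bias before training is to compare each group's share of the dataset with its share of the population the system is meant to serve. The sketch below is only an illustration: the group labels, the ten-example dataset, and the reference shares are all made up, and `representation_gaps` is a hypothetical helper rather than part of any library.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return, for each group, (share in dataset) minus (share in reference population)."""
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical group label attached to each of 10 training examples.
training_groups = ["A"] * 8 + ["B"] * 2
# Hypothetical share of each group in the target population.
population_shares = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(training_groups, population_shares)
for group, gap in gaps.items():
    # A negative gap means the group is under-represented in the data.
    print(f"group {group}: {gap:+.2f}")
```

A large negative gap is a prompt to collect more data for that group or to look closely at the model's error rates for it. It does not prove on its own that the model will be unfair.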

The Purpose and Mechanisms of Regression in AI Training

Regression is a fundamental statistical technique used in AI to model relationships between variables and predict outcomes. It is a key method for identifying trends and for making predictions that minimise error on the data the model has seen. The goal of regression is to find a mathematical function that best fits the training data, so that the AI can make predictions from new input values.

Listed below are some types of regression commonly used in AI.

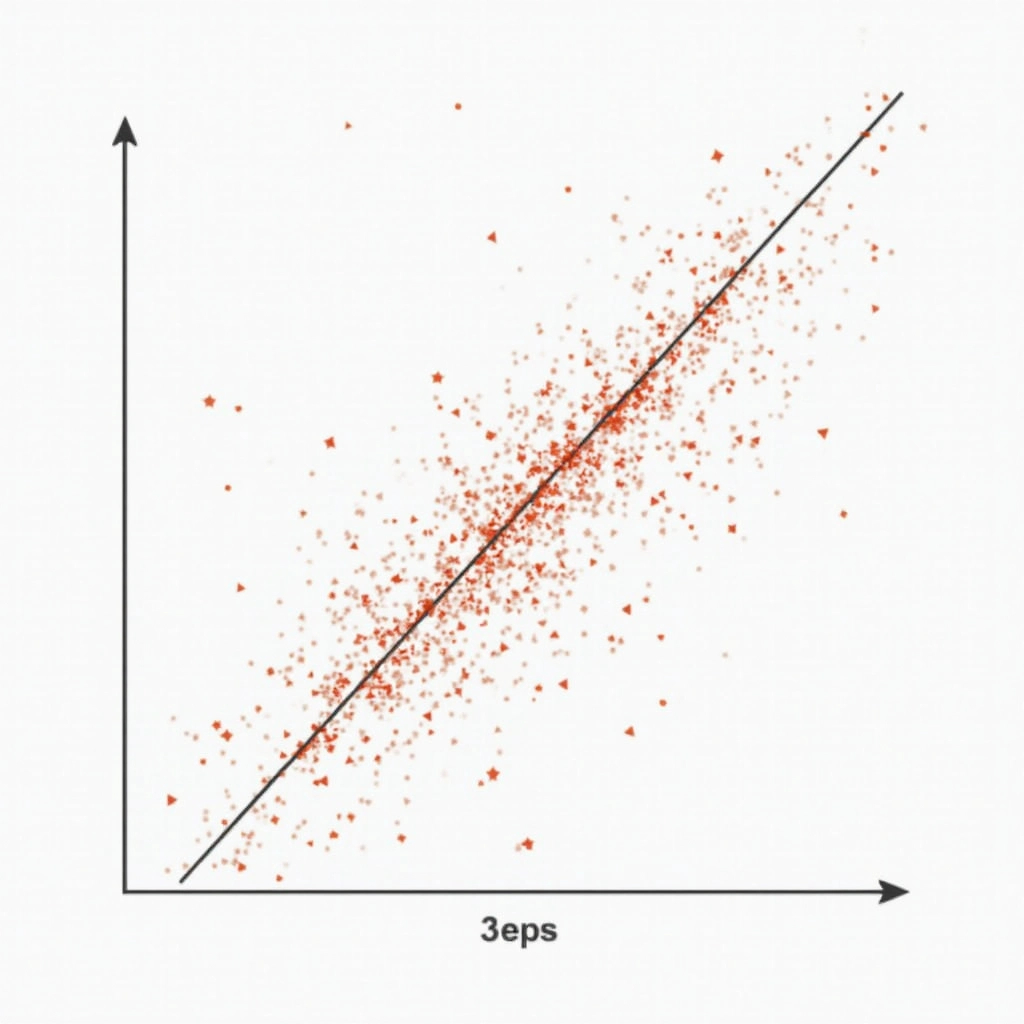

Linear Regression: Linear regression is a basic method in statistics used to predict a number based on another number. It finds the straight line that best fits a set of data points, usually by minimising the sum of squared differences between the line's predictions and the observed values. This line shows the relationship between an input (like the size of a house) and an output (like its price). The simplest form uses just one input and one output. It’s useful when there’s a clear trend, such as larger houses costing more. This method helps in making predictions, like future house prices or stock values, by learning patterns from past data.
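
As a minimal sketch of the idea, the code below fits a line to one input and one output using the standard least-squares formulas. The house sizes and prices are invented for illustration, and `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single input: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums (unnormalised).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data: house size in square metres, price in thousands.
sizes = [50, 70, 90, 110]
prices = [150, 210, 270, 330]

slope, intercept = fit_line(sizes, prices)
predicted = slope * 100 + intercept  # predicted price for a 100 m² house
print(slope, intercept, predicted)
```

Because the invented prices lie exactly on a line, the fit recovers that line. Real data would leave residual error around it.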

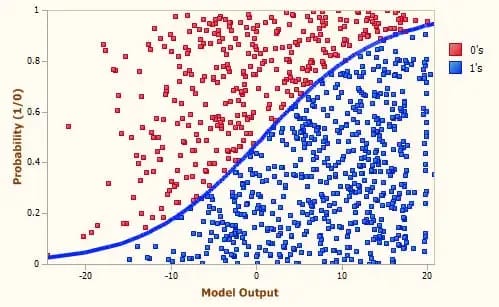

Logistic Regression: Logistic regression is a method used to predict outcomes that fall into categories, like “yes” or “no”. It combines input features (like words in an email) into a single score and converts that score into the estimated probability of an outcome. If the probability exceeds a chosen threshold (commonly 0.5), the model assigns that category. For example, in spam detection, it looks at email features to decide whether a message is likely to be spam. It’s called “logistic” because it uses the logistic function, which maps any real number to a value between 0 and 1, making it well suited to representing probabilities.
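
The prediction step can be sketched as follows. The two features, the weights, and the bias are hypothetical values chosen by hand for illustration. In practice they would be learned from labelled emails, which this sketch does not show.

```python
import math


def sigmoid(z):
    """Logistic function: maps a real number to a value strictly between 0 and 1."""
    # Two branches avoid overflow in math.exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_spam(features, weights, bias, threshold=0.5):
    """Return (probability of spam, True if classified as spam)."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    p = sigmoid(z)
    return p, p >= threshold


# Hypothetical features: [count of "free", count of "meeting"].
weights = [1.5, -2.0]
bias = -1.0

p_first, first_is_spam = predict_spam([3, 0], weights, bias)    # score z = 3.5
p_second, second_is_spam = predict_spam([0, 2], weights, bias)  # score z = -5.0
print(round(p_first, 3), first_is_spam)
print(round(p_second, 3), second_is_spam)
```

Raising `threshold` makes the classifier more cautious about flagging spam, and lowering it does the opposite. The choice of threshold is itself a design decision with fairness implications.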

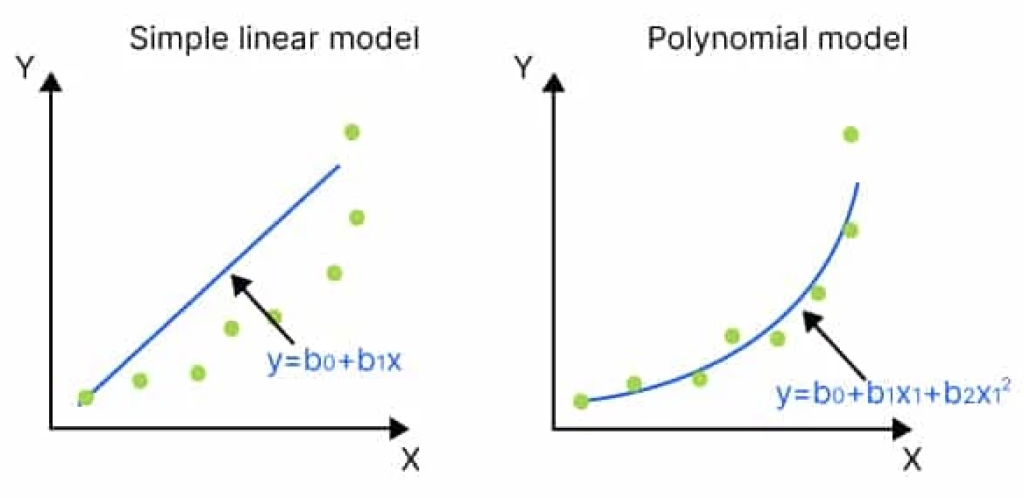

Polynomial Regression: Polynomial regression is a more complex form of regression that captures non-linear relationships between variables. In simple terms, it’s like drawing a curved line through data points instead of a straight one, helping to better fit patterns that aren’t straight. It does this by adding powers (like squared or cubed terms) of the input variable to the model. For example, instead of just using x, it also uses x², x³, and so on. This helps the model understand bends or curves in the data, making it more flexible and accurate for predicting trends that change shape over time.
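
To make the difference concrete, the sketch below fits both a straight line and a degree-2 curve to the same curved data and compares their errors. It assumes a small dataset and solves the least-squares normal equations directly. The helper names (`solve`, `fit_polynomial`, `mse`) and the data are illustrative only, and a numerical library would normally do this work.

```python
def solve(a, b):
    """Solve the linear system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(m[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (m[i][n] - s) / m[i][i]
    return w


def fit_polynomial(xs, ys, degree):
    """Least-squares coefficients [c0, c1, ..., c_degree] for y ~ sum(c_k * x**k)."""
    rows = [[x ** k for k in range(degree + 1)] for x in xs]  # powers of x as features
    size = degree + 1
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(size)] for i in range(size)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(size)]
    return solve(xtx, xty)


def mse(xs, ys, coeffs):
    """Mean squared error of the polynomial with the given coefficients."""
    preds = [sum(c * x ** k for k, c in enumerate(coeffs)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)


# Made-up curved data: y = x^2 + 1.
xs = [-2, -1, 0, 1, 2]
ys = [x ** 2 + 1 for x in xs]

line = fit_polynomial(xs, ys, degree=1)
curve = fit_polynomial(xs, ys, degree=2)
print("line error:", mse(xs, ys, line))
print("curve error:", mse(xs, ys, curve))
```

The straight line cannot follow the bend in the data, so its error stays well above that of the curve.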

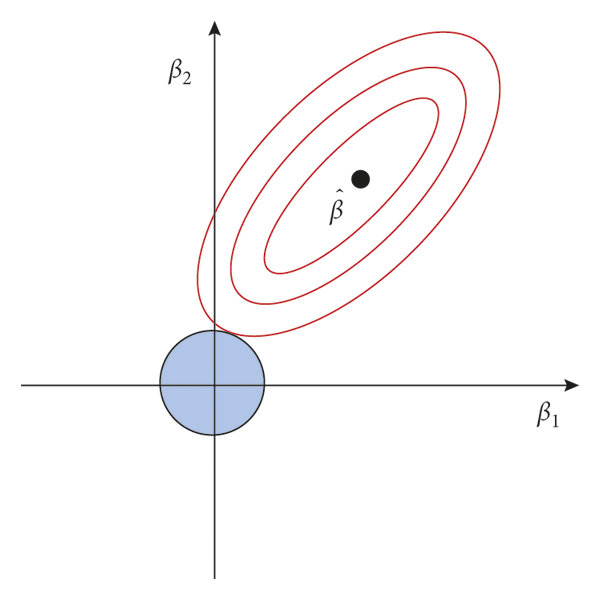

Regularised Regression (Lasso, Ridge): Regularised regression, like Lasso and Ridge, helps build better prediction models by stopping them from becoming too complex. In simple terms, these techniques add a penalty on large coefficients, which discourages the model from relying too heavily on any one variable. Ridge regression shrinks all coefficients towards zero without removing any, while Lasso can shrink some to exactly zero, removing those variables from the model completely. This means the model focuses on the most important patterns in the data. Overfitting happens when a model learns noise instead of useful trends, and regularisation reduces this risk by keeping the model simpler and more general, which tends to improve performance on new data.
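
The different behaviour of the two penalties can be sketched with closed-form shrinkage rules. These rules hold only under a simplifying assumption that the input features are orthonormal. With correlated features an iterative solver is needed, so this is an illustration of the effect rather than a general implementation. The starting coefficients and the penalty strength `lam` are made up.

```python
def ridge_shrink(w_ols, lam):
    """Ridge under an orthonormal design: scales every coefficient towards zero."""
    return w_ols / (1.0 + lam)


def lasso_shrink(w_ols, lam):
    """Lasso under an orthonormal design: soft-thresholding, which can hit exactly zero."""
    magnitude = abs(w_ols) - lam
    if magnitude <= 0:
        return 0.0
    return magnitude if w_ols > 0 else -magnitude


# Hypothetical unregularised coefficients: one strong signal, two weak ones.
ols_coefficients = [4.0, -0.3, 0.1]
lam = 0.5  # assumed penalty strength

ridge = [ridge_shrink(w, lam) for w in ols_coefficients]
lasso = [lasso_shrink(w, lam) for w in ols_coefficients]
print("ridge:", ridge)
print("lasso:", lasso)
```

Ridge keeps all three coefficients but makes each smaller. Lasso keeps only the strong one and sets the two weak ones to zero.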

Normalisation and the Problem of Outliers

Regression techniques aim to find a best-fit model that predicts outputs from input data. However, because the fit is driven by the bulk of the data, this process can exclude or misrepresent outliers: individuals or groups who do not conform to the statistical norm.

- Marginalised Communities: AI models trained using regression often optimise for the majority population, overlooking smaller subgroups. For example, a healthcare AI trained on average patient data may fail to account for rare genetic conditions that primarily affect minority groups.

- Edge Cases in Decision-Making: Financial AI models used for credit scoring may disregard individuals with unconventional financial histories, such as freelancers or gig workers, because they do not fit the traditional employment patterns that regression models prioritise.

- Algorithmic Fairness vs. Accuracy Trade-offs: Regression models typically minimise average error across the whole dataset, so the fit is dominated by the majority group, often at the expense of minority groups. This can result in AI systems that work well for some but fail badly for others.
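
The effect described in the points above can be reproduced in a toy setting. In the sketch below, a single line is fitted to pooled data from a large group and a small group whose pattern differs, and the error is then measured separately for each. The data are invented so that the two groups follow different relationships. Real datasets are rarely this clear-cut.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single input: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def group_mse(points, slope, intercept):
    """Mean squared error of the fitted line on one group's (x, y) points."""
    return sum((slope * x + intercept - y) ** 2 for x, y in points) / len(points)


# Made-up data: the majority follows y = 2x, the minority follows a different pattern.
majority = [(x, 2 * x) for x in range(1, 9)]  # 8 points
minority = [(2, 10), (6, 2)]                  # 2 points

pooled = majority + minority
slope, intercept = fit_line([x for x, _ in pooled], [y for _, y in pooled])

majority_mse = group_mse(majority, slope, intercept)
minority_mse = group_mse(minority, slope, intercept)
print("majority error:", round(majority_mse, 2))
print("minority error:", round(minority_mse, 2))
```

The overall fit looks reasonable because most points belong to the majority, yet the error for the minority group is many times larger. Reporting only a single average error would hide this.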

The Ethical Consequences of Bias in AI

The failure to address bias in AI can have severe ethical and social consequences. Some of the most pressing concerns include:

- Discrimination in Automated Decision-Making: AI is increasingly being used for hiring, loan approvals, and legal decisions. If biased data is used, these systems can reinforce systemic discrimination instead of mitigating it.

- Lack of Accountability: AI decisions are often opaque, making it difficult to challenge unfair outcomes. Without transparency in AI models, affected individuals may have no recourse.

- Public Trust in AI: As cases of biased AI systems come to light, public confidence in AI decreases. Ethical AI development is crucial to ensuring that technology benefits society rather than harming it.

Addressing Bias and Ensuring Ethical AI

To create fair and ethical AI systems, developers and policymakers must take proactive steps to mitigate bias:

- Diverse and Representative Training Data: AI models must be trained on datasets that reflect the full diversity of the population.

- Bias Audits and Testing: Regular audits should be conducted to identify and correct biases in AI systems.

- Algorithmic Transparency: AI decision-making processes should be explainable and accountable.

- Incorporating Fairness Metrics: AI models should be evaluated not just for accuracy but also for fairness across different demographic groups.

- Human Oversight: AI should complement, not replace, human judgment, particularly in high-stakes applications like hiring and law enforcement.
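
A bias audit with fairness metrics can start very simply: compute accuracy and the rate of positive decisions separately for each demographic group, then compare them. The sketch below does this for a handful of invented records. The group names, labels, and predictions are hypothetical, `group_report` is an illustrative helper, and the gap in selection rates shown here is only one of several fairness measures in use.

```python
def group_report(records):
    """records: iterable of (group, y_true, y_pred) with 0/1 labels.

    Returns per-group accuracy and selection rate (share of positive predictions).
    """
    stats = {}
    for group, y_true, y_pred in records:
        s = stats.setdefault(group, {"n": 0, "correct": 0, "selected": 0})
        s["n"] += 1
        s["correct"] += int(y_true == y_pred)
        s["selected"] += int(y_pred == 1)
    return {
        g: {"accuracy": s["correct"] / s["n"], "selection_rate": s["selected"] / s["n"]}
        for g, s in stats.items()
    }


# Invented audit data: (group, true outcome, model decision).
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 1, 0), ("B", 0, 0), ("B", 1, 1),
]

report = group_report(records)
overall_accuracy = sum(int(t == p) for _, t, p in records) / len(records)
rates = [r["selection_rate"] for r in report.values()]
parity_gap = max(rates) - min(rates)  # difference in positive-decision rates

print("overall accuracy:", overall_accuracy)
for group, metrics in report.items():
    print(group, metrics)
print("selection-rate gap:", parity_gap)
```

In this invented example the overall accuracy looks acceptable while group B is both served less accurately and selected less often, which is the kind of disparity an audit is meant to surface for human review.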

Conclusion

AI has the potential to drive progress, but only if it is developed and deployed ethically. Recognising and addressing bias in training data is essential to ensuring AI serves all individuals fairly. By improving data collection, refining training methodologies, and maintaining human oversight, we can move towards AI systems that are not only intelligent but also just.